ChatGPT, Social Engineering, and the moving goal posts in the AI arms race.

Scene

You have a new connection request.

You wake up in the morning to a new notification on your phone. It’s a LinkedIn connection request from someone you’ve never heard of. Since LinkedIn is a social network for working professionals, you start going through your verification process.

Do I know this person?

If not, is it someone that I should add?

Are they a threat? Are they a bot?

Most of us have been trained to answer these questions since our first days on the internet (don’t talk to strangers!), but that last one is relatively new in our networked interactions. In this new misinformation age, where troll farms and criminal outfits operate with aims ranging from derailing national elections to flaming competitors’ products, we’ve slowly been trained to look for markers that verify the validity and humanity of our connections:

Is this a human? Do they walk like one? Do they talk like one?

But what happens when a tool like ChatGPT, which can generate everything from poems to manifestos from a single prompt, continues to proliferate into the hands of millions?

They say 2023 is the year that AI will continue to disrupt more industries. What does that mean for our personal security on the internet?

If you’ve been on the internet in the last couple of weeks, you’ve probably seen the explosion of Artificial Intelligence (AI)-based tools.

And before you ask, no, I am not using ChatGPT or any of those tools as a cheeky way to write this blog post. This post is written by a 100% certified, Grade-T human meatsack.

A recap:

In the final throes of 2022, a handful of AI-based tools shot into popularity.

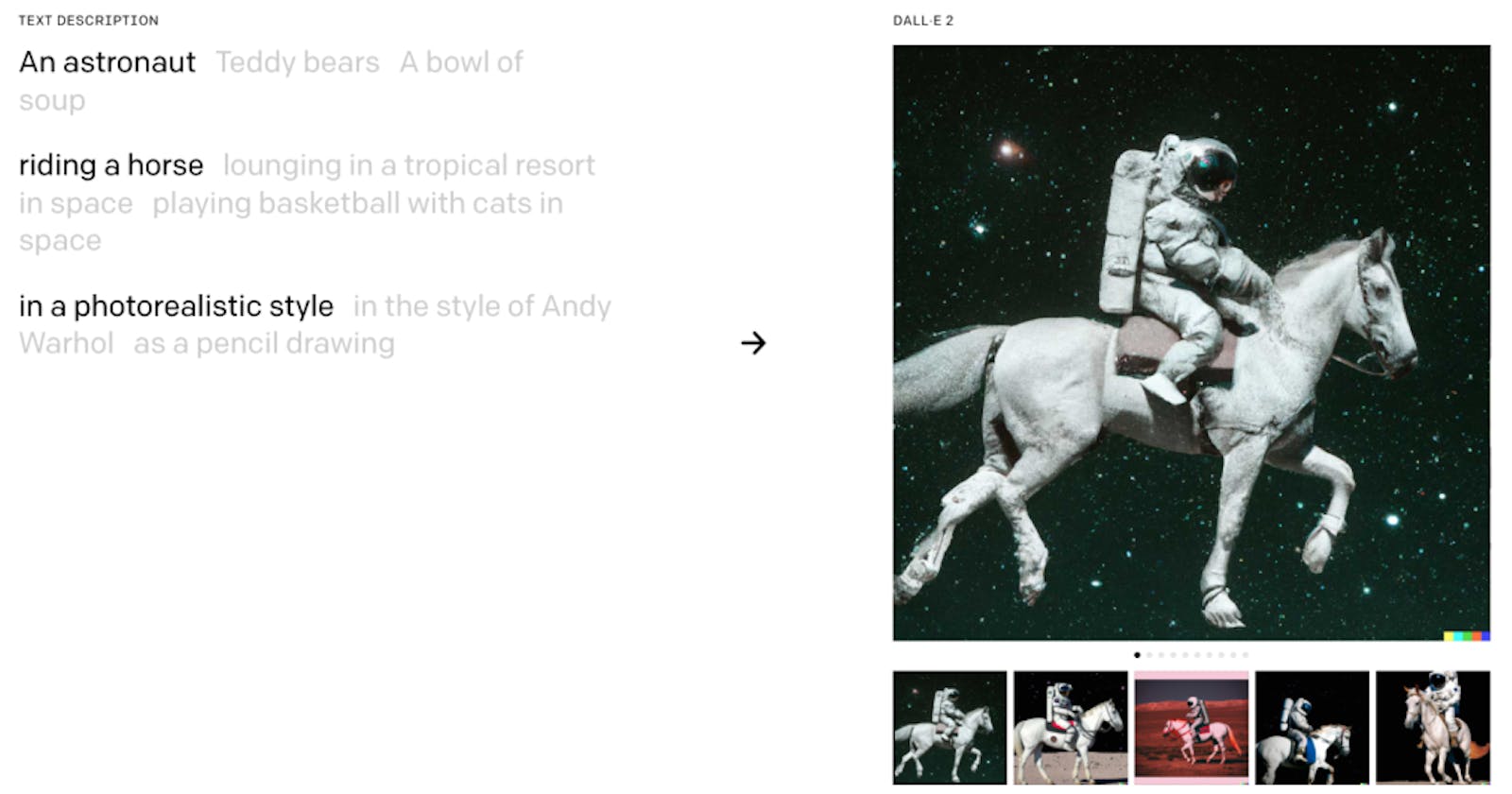

Some are focused on art, such as DALL-E, StarryAI and Lensa AI.

DALL-E takes in user prompts (“An astronaut, riding a horse, in a photorealistic style”) and generates artwork that fits them.

A snippet from DALL-E 2’s home page, where visitors can click through other variations of the prompt to see how the artwork changes.

Others, such as StarryAI and Lensa AI, are more focused on refining images provided as input. You may have seen this recently in your social networks, with friends posting impressive artistic self-portraits.

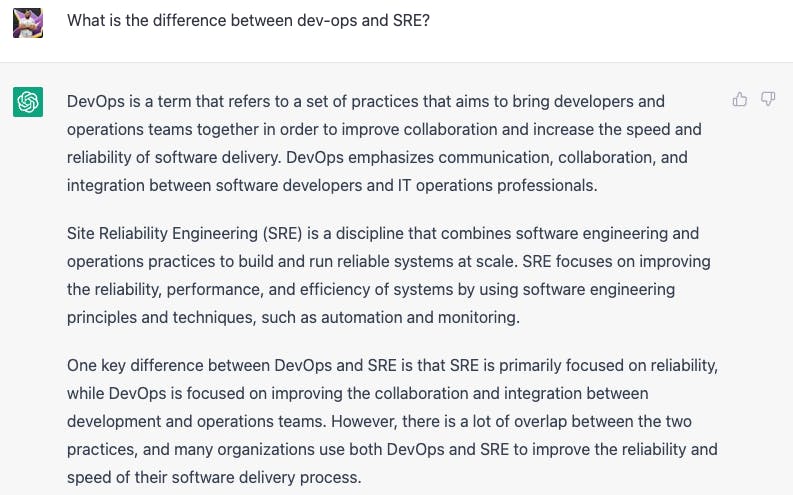

Others, such as ChatGPT, are focused on text. Specifically, they use language processing models for tasks like text generation, language translation, text summarization and sentiment analysis.

Some notable examples include people asking ChatGPT to create custom poems, write (and fix) their code and even generate copy for their marketing or blog posts (again, not me).

As an example for this blog post, here’s a prompt that was noodling in my head from the most recent podcast I’d listened to.

So, how does an AI helping provide custom output to prompts pose a security risk?

Security Considerations

Security is an arms race. For every attack or sword 🗡, there will eventually be a defence or shield 🛡...

...until the attackers come up with a bigger sword, which requires defenders to build a better shield, and so on and so forth.

There are many ways to attack a target that is connected to the internet. However, one of the most dangerous and bountiful is exploiting the weakest link: humans. This method of attack is known as social engineering.

[…] Social engineering is the psychological manipulation of people into performing actions or divulging confidential information. A type of confidence trick for the purpose of information gathering, fraud, or system access, it differs from a traditional “con” in that it is often one of many steps in a more complex fraud scheme.

Carrying out this sort of attack requires an entry point or vector. In less “human” attacks this could be exploiting an insecure port or a poorly compartmentalized process; social engineering usually occurs via interactions.

This sort of attack can be stopped “at the door” by blocking, by default, any interactions from people who are not friends. Thankfully, this appears to be becoming the default “on-rails” setup experience for most new apps.

Attack: Interactions from malicious actors

Defence: Default block interactions from strangers.

However, what if this is not the default? After all, the main draw of social networks is connecting with people, right?

This is where the friend-or-foe verification process comes into play, and where tools like ChatGPT can be problematic.

Most people are quite good at blocking friend requests on more “personal” social media (i.e. Facebook, Instagram) if they can’t recognize the connection on first glance.

The primary verification test for more personal social media is usually:

Do I know this name?

Does this picture look familiar?

The secondary verification test, which applies under a looser security posture when the primary test fails, is to check for common connections.

- Does anyone else on my friends list know this person?

That is to say, the minimum acceptable test for verification on more personal social networks is peer verification.

If a bunch of people I know seem to think this person is legit, then they must be.

Attack: Friend requests from malicious actors on personal social networks.

Defence: Verify the connection’s identity via name and picture, OR verify it via peer verification.
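The two-stage test above can be written down as a small decision function. This is a minimal sketch, not a feature of any real platform: the `ConnectionRequest` type, the `verify_personal_request` function and the `min_mutuals` threshold are all hypothetical, and “does this picture look familiar?” is reduced to matching a stand-in picture ID.

```python
from dataclasses import dataclass, field


@dataclass
class ConnectionRequest:
    """Hypothetical model of an incoming friend request."""
    name: str
    picture_id: str  # stand-in for "does this picture look familiar?"
    mutual_friends: set[str] = field(default_factory=set)


def verify_personal_request(
    request: ConnectionRequest,
    known_names: set[str],
    known_pictures: set[str],
    min_mutuals: int = 1,  # hypothetical threshold for the looser secondary test
) -> str:
    # Primary test: do I know this name, and does this picture look familiar?
    # (Assumption: both must be recognized to pass.)
    if request.name in known_names and request.picture_id in known_pictures:
        return "accept: recognized"
    # Secondary test, only reached when the primary test fails:
    # does anyone else on my friends list know this person?
    if len(request.mutual_friends) >= min_mutuals:
        return "accept: peer-verified"
    return "reject"


if __name__ == "__main__":
    stranger = ConnectionRequest("Mallory", "mallory.jpg", {"Bob"})
    print(verify_personal_request(stranger, {"Alice"}, {"alice.jpg"}))
    # accept: peer-verified
```

Note how the secondary test lets a complete stranger through as soon as a single mutual friend exists, which is exactly the gap a patient attacker aims for.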

But what about a setting like LinkedIn? That site is unique because it’s a “less personal” site meant for networking amongst professionals. Just like at a job fair or conference, it’s expected that you may get requests from people you are not immediately familiar with but who are reaching out for professional reasons. Perhaps that connection request is from a headhunter reaching out about a slick new role.

In these less personal settings, most people relax their security posture, lowering the bar for verification. Here, the name-and-picture check may be skipped entirely in favour of validation by network and industry.

Sure, this name and picture might not immediately click, but perhaps I ran into them at a conference. Are they in my industry? And are they connected to any other peers?

The issues from this scenario are as follows:

All it takes is for one bad actor’s connection request to be accepted by one person, and the attacker can start sending connection requests to other members of the original acceptor’s professional network.

Every additional connection request that’s accepted continues to add credibility.

For this attack, an attacker simply needs to create enough fake profiles (‘bots’) to get through, and they are betting on the fact that the transactional nature of the network will not lead to people “reference-checking” each other on new connections.
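The snowball effect can be illustrated with a toy simulation. This is a sketch under simplifying assumptions, not a model of how LinkedIn actually works: the network is a hypothetical undirected graph, a few “careless” members accept without any checks, and everyone else accepts only once they share at least `min_mutuals` connections with the attacker’s fake profile. The `simulate_spread` function and the names in the example are made up for illustration.

```python
def simulate_spread(
    network: dict[str, set[str]],
    careless: set[str],
    min_mutuals: int = 2,
) -> list[str]:
    """Return people in the order they accept the attacker's requests.

    `network` maps each person to their existing connections (undirected).
    Members of `careless` accept without checking; everyone else accepts only
    if they already share at least `min_mutuals` connections with the attacker.
    """
    connections = set(careless)  # the fake profile's current connections
    accepted = sorted(careless)  # sorted for deterministic output
    changed = True
    while changed:
        changed = False
        # Each acceptance exposes the acceptor's contacts to new requests.
        reachable = set().union(*(network.get(p, set()) for p in connections))
        for person in sorted(reachable - connections):
            mutuals = network.get(person, set()) & connections
            # Acceptances earlier in this pass already count as mutuals here.
            if len(mutuals) >= min_mutuals:
                connections.add(person)
                accepted.append(person)
                changed = True
    return accepted


if __name__ == "__main__":
    network = {
        "ana": {"ben", "cat", "dev"},
        "ben": {"ana", "cat", "eli"},
        "cat": {"ana", "ben", "eli"},
        "dev": {"ana"},
        "eli": {"ben", "cat"},
    }
    print(simulate_spread(network, careless={"ana", "ben"}))
    # ['ana', 'ben', 'cat', 'eli']
```

With two careless acceptors and `min_mutuals=2`, `eli` only accepts because `cat` accepted first: each accepted request adds the credibility needed to unlock the next one.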

Hey, I see that you accepted a connection request from X. Do you know this person?

The obvious defence against this exploit is to screen connection requests more carefully.

Sure, this person appears to be known by others in my network – but do they seem to be legitimate?

As an aside, you can see a great example of this type of social engineering in the story of Anna Delvey (the inspiration for the Netflix show “Inventing Anna”).

She was able to con her way into networking with elite socialites and swindle hundreds of thousands of dollars. She did this by exploiting the transactional nature of those relationships to pass as legitimate.

“Well obviously she’s at this big party, someone must have vouched for her”

“I don’t know her, but she appears to be close with X, therefore she must be legit”

By (1) appearing to belong and (2) trusting that the rest of their peer network had done its due diligence, Anna was able to gain a foothold within the social network and continue exploiting connections for personal gain.

Attack: Friend requests from malicious actors on professional social networks.

Defence: Verify ID via peer acceptance and behaviour.

For most, this is where a credibility test comes into play, trying to determine whether an incoming friend request is legitimate and human:

“They are in my industry and professional network: do they act like a member of that network? What are the indicators?”

Do they interact with others?

Do they interact with others posts?

Do they write posts?

In these cases, custom content and interactions are the most reliable indicators: if I’m assessing the credibility of a stranger who appears to be accepted by my network, what they write and how they interact tell me the most.

The check is for effort. How much effort would a malicious actor put into attacking you? Most of the time, very little.

These are the most reliable indicators because they require the attacker to put in significantly more effort to gain context and create posts and interactions. Fake bot accounts are easy to create, and generic posts can be scripted with a bit more effort, but crafting genuine content that could only come from someone actually within the industry is considerably harder.

Well… until now.

Enter a tool like ChatGPT. With ChatGPT, an attacker can much more easily create posts, comments and content that are both contextually accurate and very hard to distinguish from a human’s.

Imagine that you work in Cloud Infrastructure and you get a connection request from someone. You don’t know them, but they appear to be in the same industry.

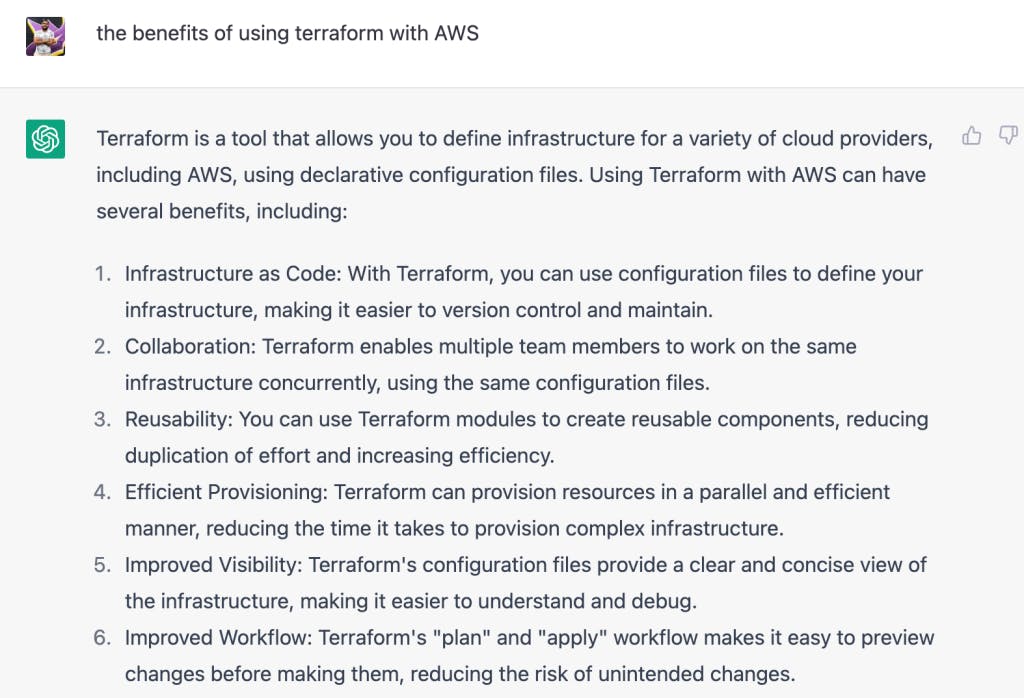

You see that they actively write posts. They appear to work with Terraform and have a blog post titled “The benefits of using Terraform with AWS”.

AI tools can easily create these types of blog posts.

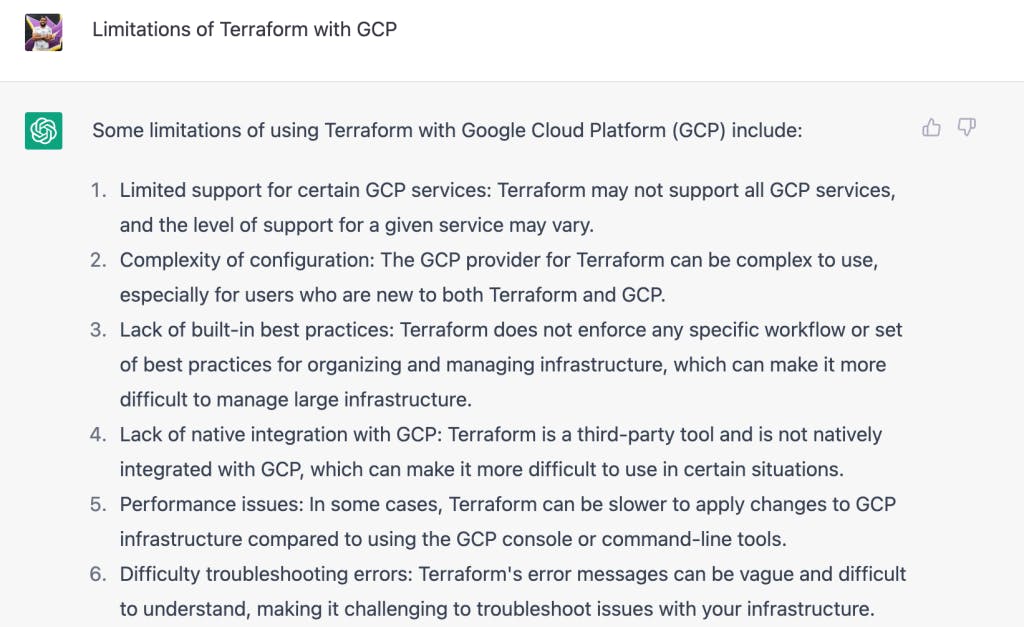

You even see that they’ve left comments on a peer’s post about why Terraform sucks with GCP.

Again, AI tools can easily create the text for these sorts of interactions.

Sure, a more experienced infrastructure engineer might spot little quirks or issues in the content under closer scrutiny, but could this sort of content pass at first glance?

Unfortunately, I believe the answer is yes. A problematic scenario I imagine is an attacker, armed with cursory knowledge and basic wordsmithing skills, using a tool like ChatGPT to pass the friend-or-foe (or peer) test. Attackers can use these tools to aid in interactions and more easily masquerade as members of an industry to gain access to a professional network and exploit the people in it. The worst-case scenario is one where the attacker isn’t even in the loop after the initial setup: a program could be written to script content and interactions using ChatGPT. Essentially, take the problem that already exists at scale with low-effort bots and combine it with the power of ChatGPT.

Seemingly overnight, these AI tools have become one more tool for attackers to use.

Defence

But it’s not all doom and gloom. In fact, within a couple of weeks, tools were already being created to detect AI-generated posts, some of them using AI themselves. One example is GPTZero.

But as with any security posture, I believe the defence is not just one tool, but a layered approach.

How can I strengthen my security posture against social engineering?

Do not accept interactions from anyone who’s not a friend or a friend of a friend.

If you receive a friend request from someone who appears to be associated with another connection, do a casual reference check: “Hey, do you know this person?”

If on a less personal network, check the quality of the interactions. (Do these comments make sense? Do they appear to have relevant context?) Perhaps run that content through a tool like GPTZero.

Reach out to them directly to assess their validity.
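The checklist above lends itself to a layered sketch in which each layer can reject a request on its own. Everything here is hypothetical: the `Profile` fields, the `layered_check` function and the 0.5 cut-off are illustrative assumptions, and `ai_likelihood` is a pluggable stand-in for whatever detector you use. It is not GPTZero’s API, and the toy `keyword_detector` included for the demo is deliberately naive.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Profile:
    """Hypothetical snapshot of what you can observe about a new connection."""
    name: str
    mutual_friends: set[str] = field(default_factory=set)
    vouched_by: set[str] = field(default_factory=set)  # mutuals who said "yes, I know them"
    posts: list[str] = field(default_factory=list)
    replied_to_direct_message: bool = False


def layered_check(
    profile: Profile,
    friends: set[str],
    ai_likelihood: Callable[[str], float],  # stand-in for a detector; not a real API
    max_ai_likelihood: float = 0.5,  # hypothetical cut-off
) -> tuple[bool, str]:
    """Run each defensive layer in turn; the first failing layer rejects."""
    if profile.name in friends:
        return True, "already a friend"
    # Layer 1: only friends of friends get through; strangers are blocked.
    mutuals = profile.mutual_friends & friends
    if not mutuals:
        return False, "no mutual friends"
    # Layer 2: casual reference check ("Hey, do you know this person?").
    if not (profile.vouched_by & mutuals):
        return False, "no mutual friend vouched for them"
    # Layer 3: content quality. A detector score is one signal only; it does not
    # replace reading the posts and asking whether they make sense in context.
    if any(ai_likelihood(post) > max_ai_likelihood for post in profile.posts):
        return False, "content flagged as likely AI-generated"
    # Layer 4: reach out directly and see whether they respond.
    if not profile.replied_to_direct_message:
        return False, "did not respond to a direct message"
    return True, "passed all layers"


def keyword_detector(text: str) -> float:
    """Toy placeholder detector for the demo; real detectors are far more involved."""
    return 0.9 if "as an ai language model" in text.lower() else 0.1


if __name__ == "__main__":
    request = Profile(
        name="Mallory",
        mutual_friends={"Bob"},
        vouched_by=set(),
        posts=["The benefits of using Terraform with AWS"],
        replied_to_direct_message=True,
    )
    print(layered_check(request, {"Bob", "Carol"}, keyword_detector))
    # (False, 'no mutual friend vouched for them')
```

Ordering the layers from cheapest (an automatic mutual-friend check) to most effortful (a direct message) is a design choice of this sketch; the point of layering is that no single check, including the AI detector, has to be perfect.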

Social engineering capitalizes on our human tendency to want to connect with others without scrutinizing every detail. The best guard against it is to maintain vigilance, defend in depth and perhaps use the very same technologies (AI) to fight fire with fire.